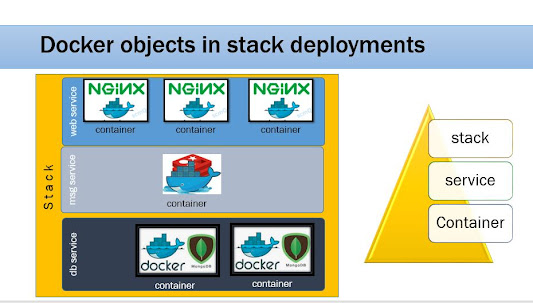

Docker Service Stack Deployment

To deploy a Docker service stack, we need an orchestrator, either Swarm or Kubernetes. In this post, I experiment with a Docker Swarm cluster.

Prerequisites

- A machine with Docker installed
- Docker Swarm initialized and active
- A manifest file that describes the tasks, which are specified in the service definitions. Each service is defined declaratively in a YAML file.

Docker Swarm Service Stack Deployment

I read the book Learn Docker - Fundamentals of Docker 19.x, where I found a nice explanation of it.

Let's run the Docker Swarm visualizer container. It shows the status of the Swarm cluster nodes and of the container services, which makes it very clear how the orchestrator manages Docker containers.

```shell
docker run -it -d \
  -p 8080:8080 \
  -v /var/run/docker.sock:/var/run/docker.sock \
  dockersamples/visualizer
```

Alternatively, you can use the docker-compose file a...
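The script below is a minimal sketch of the same visualizer defined declaratively as a stack. It assumes the Compose file format 3.x. The file name `visualizer-stack.yml` and the stack name `viz` are hypothetical, and this is not necessarily the file used in the book. The script writes the service definition to a YAML file and then runs `docker stack deploy`, but only when the current node is a Swarm manager.

```shell
#!/usr/bin/env bash
# Sketch: deploy the Swarm visualizer as a stack instead of a plain container.
# Hypothetical names (not from the original post): visualizer-stack.yml, viz.
set -euo pipefail

STACK_FILE="visualizer-stack.yml"   # hypothetical file name
STACK_NAME="viz"                    # hypothetical stack name

# Declarative service definition (Compose file format 3.x).
cat > "$STACK_FILE" <<'EOF'
version: "3.7"
services:
  visualizer:
    image: dockersamples/visualizer
    ports:
      - "8080:8080"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    deploy:
      placement:
        constraints:
          - node.role == manager
EOF

# Deploy only if the docker CLI exists and this node is a Swarm manager.
if command -v docker >/dev/null 2>&1 \
   && [ "$(docker info --format '{{.Swarm.ControlAvailable}}' 2>/dev/null)" = "true" ]; then
  docker stack deploy -c "$STACK_FILE" "$STACK_NAME"
  docker stack services "$STACK_NAME"
else
  echo "Not on a Swarm manager; wrote $STACK_FILE only." >&2
fi
```

The placement constraint pins the service to a manager node. This matters because the visualizer reads the cluster state through the local Docker socket, and only a manager can report it. After a successful deploy, the visualizer should be reachable on port 8080 of the cluster, as with the `docker run` version.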