Kubernetes cluster configuration in VirtualBox with Vagrant

Thanks to Rajkumar, who developed the Vagrantfile for configuring a Kubernetes cluster in VirtualBox with Vagrant and published it on GitHub. For those who don't know Vagrant: it is a tool for building and managing virtual machine environments, which lets you make better use of your system resources by running multiple virtual machines, each with its own operating system, on your laptop or desktop.


check the VM are created as expected

Check that all are in running state, if not you need to check the log file that is generated in the same path where Vagrantfile exists.

Connect with your PuTTY to k8s-master that is running on 192.168.33.10 IP address.

Check the versions of kubeadm, kubectl, and kubelet

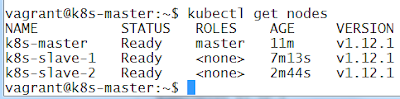

Check the nodes list


You just need to follow the simple steps I followed in my experiment:

Prerequisites for Kubernetes Cluster Creation

System resource requirements on VirtualBox

- 2 GB of RAM for each node

- 2 CPU cores for each node

I ran this experiment on my Windows 7 laptop; you can do the same on any later version of Windows. In total, 3 VMs are created under a VirtualBox group named "Kubernetes Cluster", as defined in the Vagrantfile.
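With 2 GB of RAM and 2 CPU cores per node, the three VMs together ask VirtualBox for 6 GB of RAM and 6 cores. A minimal shell sketch of that arithmetic, with a RAM check against the host; the function names and the reserved-memory margin are hypothetical, not part of the Vagrantfile. CPU cores are not checked, because VirtualBox lets the VMs share host cores (at some cost in performance).

```shell
# Sketch (hypothetical helpers): host resources needed for the cluster,
# assuming every node gets the same allocation (2 GB RAM, 2 CPU cores).

# required_resources NODES RAM_GB_PER_NODE CPUS_PER_NODE
# Prints the totals the VMs will request from VirtualBox.
required_resources() {
  echo "RAM: $(($1 * $2)) GB, CPU cores: $(($1 * $3))"
}

# fits_in_ram NODES RAM_GB_PER_NODE HOST_RAM_GB RESERVED_GB
# Succeeds (exit status 0) if the VMs plus a reserve for the host OS fit
# into the host's physical RAM. RESERVED_GB is a hypothetical safety margin.
fits_in_ram() {
  [ $(($1 * $2 + $4)) -le "$3" ]
}
```

For example, `required_resources 3 2 2` prints `RAM: 6 GB, CPU cores: 6`, and `fits_in_ram 3 2 8 2` succeeds on an 8 GB host when 2 GB is kept back for Windows.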

Infrastructure as Code: Vagrantfile

The Vagrantfile is built around a Ruby array that creates the k8s-head, k8s-node1, and k8s-node2 definitions. Once the Ubuntu Xenial boxes are provisioned, custom shell scripts are run at boot time.

- Tasks common to both master and slave nodes are executed with the shell provisioner's inline option.

- Install Docker CE 17.03.

- Add the vagrant user to the docker group, so that docker commands can be run as the vagrant user without sudo.

- Install kubelet, kubeadm, and kubectl.

- Turn swap off, because the kubelet requires it.
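The common tasks above might look roughly like this as an inline provisioning script. This is a sketch under assumptions, not the actual Vagrantfile content: it assumes an Ubuntu Xenial guest, running as root, with the Docker CE and Kubernetes apt repositories already configured (repository setup is omitted). The `run` helper and the `DRY_RUN` switch are hypothetical additions so the steps can be previewed without changing the system.

```shell
# Sketch of the common (master and slave) provisioning tasks listed above.
# Assumptions, not from the original Vagrantfile: Ubuntu Xenial guest, run as
# root, Docker CE and Kubernetes apt repositories already configured.
# Hypothetical helper: with DRY_RUN set, commands are printed, not executed.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Comment out active swap entries in an fstab-style file (default /etc/fstab)
# so swap stays off after a reboot. Writes through a temp file for portability.
comment_out_swap() {
  fstab=${1:-/etc/fstab}
  sed '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab" > "$fstab.tmp" &&
    mv "$fstab.tmp" "$fstab"
}

provision_common() {
  run apt-get update
  # The post uses Docker CE 17.03; to pin it, install docker-ce=<version>
  # with the exact version string your repository offers.
  run apt-get install -y docker-ce
  # Let the vagrant user run docker without sudo (applies from the next login).
  run usermod -aG docker vagrant
  run apt-get install -y kubelet kubeadm kubectl
  # By default the kubelet refuses to start while swap is on:
  # disable it now and for later boots.
  run swapoff -a
  run comment_out_swap /etc/fstab
}
```

Running `DRY_RUN=1 provision_common` lists the commands in order without touching the VM, which is a cheap way to review the sequence before putting it into the provisioner.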

All the required setup runs on the following nodes, in this sequence:

- k8s-master node runs on 192.168.33.10

- k8s-slave1 node runs on 192.168.33.11

- k8s-slave2 node runs on 192.168.33.12
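Assuming these addresses are on a Vagrant private network that the host can reach, a small lookup plus a ping loop is a quick way to confirm the layout before going further. The function names are hypothetical, and the node names follow the list above; adjust them if your Vagrantfile names the machines differently (for example k8s-head).

```shell
# Sketch (hypothetical helpers): the IP plan above as a lookup, plus a ping
# check from the host. Assumes the addresses are on a Vagrant private network
# reachable from the host, and a Linux/macOS-style ping ("-c" = count).
node_ip() {
  case $1 in
    k8s-master) echo 192.168.33.10 ;;
    k8s-slave1) echo 192.168.33.11 ;;
    k8s-slave2) echo 192.168.33.12 ;;
    *) return 1 ;;   # unknown node name
  esac
}

check_reachable() {
  for node in k8s-master k8s-slave1 k8s-slave2; do
    ip=$(node_ip "$node")
    if ping -c 1 "$ip" > /dev/null 2>&1; then
      echo "$node ($ip): reachable"
    else
      echo "$node ($ip): not reachable"
    fi
  done
}
```

Run `check_reachable` from a Linux or macOS shell (or WSL) after `vagrant up`; the Windows ping uses `-n` rather than `-c` for the count.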

Bootstrap Setup

The master node requires the following steps:

Each slave node, after boot-up, only runs an inline step that joins the Kubernetes cluster using a script generated on the master node.
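The post does not spell out the master steps. A typical kubeadm-based sequence, sketched under stated assumptions: `kubeadm init` advertises the private IP, the vagrant user gets a kubeconfig, and the join command is written to a file the slaves can read. The shared path `/vagrant/join.sh` (inside Vagrant's default synced folder), the pod CIDR, and the `run`/`DRY_RUN` helper are assumptions, not taken from Rajkumar's Vagrantfile.

```shell
# Sketch of the bootstrap: kubeadm init on the master, then each slave runs
# the join script the master generated. Assumptions, not from the post:
# scripts run as root; /vagrant is the synced folder; the join.sh name and
# the pod CIDR are hypothetical. With DRY_RUN set, commands are only printed.
MASTER_IP=192.168.33.10
POD_CIDR=10.244.0.0/16          # hypothetical; Flannel's default pod network
JOIN_SCRIPT=/vagrant/join.sh    # hypothetical path in the synced folder

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

bootstrap_master() {
  # Advertise the private-network IP; otherwise kubeadm may pick the
  # VirtualBox NAT interface, whose address the other VMs cannot reach.
  run kubeadm init --apiserver-advertise-address="$MASTER_IP" \
    --pod-network-cidr="$POD_CIDR"
  # Give the vagrant user a kubeconfig so kubectl works without sudo.
  run mkdir -p /home/vagrant/.kube
  run cp /etc/kubernetes/admin.conf /home/vagrant/.kube/config
  run chown vagrant:vagrant /home/vagrant/.kube/config
  # Write the join command (token and CA cert hash) where the slaves can read it.
  if [ -n "${DRY_RUN:-}" ]; then
    echo "kubeadm token create --print-join-command > $JOIN_SCRIPT"
  else
    kubeadm token create --print-join-command > "$JOIN_SCRIPT"
  fi
}

join_slave() {
  # Run on each slave after boot-up, once the master has written the script.
  run sh "$JOIN_SCRIPT"
}
```

The ordering matters: `join_slave` only works after `bootstrap_master` has finished on the master, which is why the master is brought up first.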

Executing the setup

vagrant up

vagrant status

[Screenshot: Vagrant status of the Kubernetes cluster]
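To spot a VM that is not running without reading the status table by eye, the machine-readable form of `vagrant status` can be filtered. A minimal sketch; the function name is hypothetical, and it relies on the comma-separated `timestamp,target,type,data` layout of `vagrant status --machine-readable`, keeping only the `state` lines.

```shell
# Sketch (hypothetical helper): print the names of Vagrant machines whose
# state is not "running". Input is the output of
#   vagrant status --machine-readable
# whose lines are comma-separated: timestamp,target,type,data.
not_running() {
  # Keep only "state" lines and report the target (machine name) column.
  awk -F, '$3 == "state" && $4 != "running" { print $2 }'
}
```

For example, `vagrant status --machine-readable | not_running` prints nothing when every VM is up; any name it prints is a VM whose log file is worth checking.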

Connect with PuTTY to k8s-master, which is running on IP address 192.168.33.10.

Check the versions of kubeadm, kubectl, and kubelet

kubectl version

kubeadm version

kubelet --version

For better formatted output:

kubectl version -o yaml

kubeadm version -o yaml

[Screenshot: kubeadm, kubectl, and kubelet versions]
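Before bootstrapping or troubleshooting, it helps to confirm that the three tools agree on a version. A minimal sketch; the helper names are hypothetical, and requiring the same minor version is only a simple sanity check for a fresh install, not Kubernetes' full version-skew policy.

```shell
# Sketch (hypothetical helpers): reduce a version string such as "v1.19.2"
# or "Kubernetes v1.19.2" to "v1.19", then check that all arguments agree.
minor_version() {
  echo "$1" | sed -E 's/.*v([0-9]+)\.([0-9]+)\..*/v\1.\2/'
}

# same_minor VERSION...  succeeds if every argument has the same major.minor.
same_minor() {
  first=$(minor_version "$1")
  for v in "$@"; do
    [ "$(minor_version "$v")" = "$first" ] || return 1
  done
}
```

One way to feed it, assuming the usual output formats of these commands: `same_minor "$(kubeadm version -o short)" "$(kubelet --version)" "$(kubectl version --client -o yaml | awk '/gitVersion:/ {print $2; exit}')"`.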

kubectl get nodes

[Screenshot: kubectl get nodes output]
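A minimal sketch for picking out nodes that are not Ready from `kubectl get nodes --no-headers`; the function name is hypothetical, and it simply compares the STATUS column with `Ready`, so a status such as `Ready,SchedulingDisabled` is reported as well.

```shell
# Sketch (hypothetical helper): print the names of nodes whose STATUS column
# is not exactly "Ready". Input is the output of
#   kubectl get nodes --no-headers
# where column 1 is NAME and column 2 is STATUS.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}
```

For example, `kubectl get nodes --no-headers | not_ready_nodes`. To block until all nodes are Ready instead, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` does the waiting.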

Note: Make sure that your Windows firewall is disabled when running Vagrant on your Windows laptop.

You might also be interested in exploring the latest Docker 19 Community Edition learning experiments on Ubuntu 19.04.


Comments

I just followed your procedure, and within 30 minutes the Kubernetes cluster was operational.

In fact, this practical, hands-on Kubernetes cluster tutorial gives a remarkable insider's story of Kubernetes.


vagrant@k8s-master:~$ kubectl describe no k8s-master | grep -i taint

Taints: node.kubernetes.io/not-ready:NoSchedule

vagrant@k8s-master:~$ kubectl describe no k8s-slave-1 | grep -i taint

Taints: node.kubernetes.io/not-ready:NoSchedule

vagrant@k8s-master:~$ kubectl describe no k8s-slave-2 | grep -i taint

Taints: node.kubernetes.io/not-ready:NoSchedule

vagrant@k8s-master:~$


NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 41m v1.19.2

k8s-slave-1 NotReady 30m v1.19.2

k8s-slave-2 NotReady 22m v1.19.2

How can this be fixed?

"runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialize"
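On the question above: the NotReady status, the `node.kubernetes.io/not-ready:NoSchedule` taint, and the kubelet message are all consistent with no pod network (CNI) add-on having been installed. After `kubeadm init`, nodes typically stay NotReady until a network add-on such as Calico or Flannel is applied with `kubectl apply -f`, using the manifest from that project's documentation and a pod CIDR that matches `--pod-network-cidr`. A minimal sketch to confirm the symptom on a node; the function name is hypothetical, and `/etc/cni/net.d` is assumed to be the kubelet's CNI config directory (the usual default).

```shell
# Sketch (hypothetical helper): succeed if the given directory holds at least
# one CNI network config file. /etc/cni/net.d is assumed as the default
# directory the kubelet reads; CNI config files end in .conf, .conflist or .json.
has_cni_config() {
  dir=${1:-/etc/cni/net.d}
  for f in "$dir"/*.conf "$dir"/*.conflist "$dir"/*.json; do
    # An unmatched glob stays literal, so check that the file really exists.
    if [ -e "$f" ]; then
      return 0
    fi
  done
  return 1
}
```

On a node showing the error, `has_cni_config || echo "no CNI config yet: install a pod network add-on"` makes the cause explicit; once the add-on's pods are running, the directory is populated and the nodes should move to Ready.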