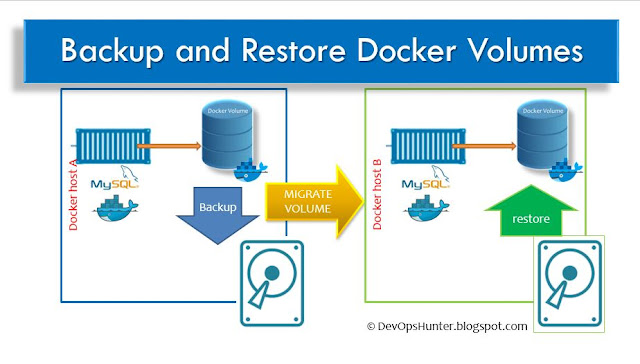

Backup and Recovery, Migrate Persisted Volumes of Docker

Namaste! Dear DevOps enthusiasts, in this article I would like to share an experiment with the persistent data volumes that we learned about in an earlier blog post.

In the real world, these data volumes need to be backed up regularly so that, in case of a disaster, we can restore the data from those backups.

Docker Volumes Backup and Restore

This story can happen in any real-world project: data has to migrate from an on-premises data center to a cloud platform. Another scenario is backing up database servers and restoring them into a different private network on a cloud platform.

Here I've tested this use case on two Vagrant VirtualBox machines: one is used for the backup and the other for restoring the MySQL container.

In outline, the experiment goes like this. We set up a MySQL container on a named volume, log in to the MySQL database within that container (Step 4), create a database named 'trainingdb' at the mysql prompt, check it with the SHOW command, and create a table named trainings_tbl with the required fields and their respective data types. Then we insert rows into trainings_tbl; any project table fed from a UI could be used here, but as this is an experiment we enter the records manually. After that we take the backup (Step 5), migrate it, restore it on the other machine, and finally clean up the volumes (Step 8, optional), either by deleting a single data volume or by deleting all unused ones.

Setting up the Docker MySQL container with Volume

Step 1: Let's create a volume named first_vol:

docker volume create first_vol #create

docker volume ls #Confirm
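To see where Docker keeps the volume's files on the host, `docker volume inspect` can print the mount point. This is only a minimal sketch: the function name `volume_mountpoint` is my own and not part of Docker, and first_vol is the volume from Step 1.

```shell
# volume_mountpoint is a hypothetical helper name (not a Docker command).
# It prints the host directory that backs a named volume.
volume_mountpoint() {
  local volume="${1:-first_vol}"   # defaults to the volume created in Step 1
  docker volume inspect --format '{{ .Mountpoint }}' "$volume"
}
```

Calling `volume_mountpoint first_vol` on a default Linux install typically prints a path under /var/lib/docker/volumes.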

Step 2: Create a container from the MySQL database image, with the volume attached, to take the backup from:

docker run -dti --name c4bkp --env MYSQL_ROOT_PASSWORD=welcome1 \
  --volume first_vol:/var/lib/mysql mysql:5.7

Step 3: Enter the c4bkp container:

docker exec -it c4bkp bash

Create data in MySQL database

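Step 4: Log in to the MySQL database within the MySQL container:

mysql -u root -p

At the password prompt, enter the password that was set through the MYSQL_ROOT_PASSWORD environment variable (a key-value pair) when the container was created, welcome1 in this case.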

Create a database named 'trainingdb' at the mysql prompt in the same container:

CREATE DATABASE trainingdb;
USE trainingdb;

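Now check the database that was created using the SHOW command, then create the table trainings_tbl with the required fields and their respective data types:

SHOW CREATE DATABASE trainingdb;

CREATE TABLE trainings_tbl(
  training_id INT NOT NULL AUTO_INCREMENT,
  training_title VARCHAR(100) NOT NULL,
  training_author VARCHAR(40) NOT NULL,
  submission_date DATE,
  PRIMARY KEY ( training_id )
);

Now insert rows into the trainings_tbl table. Any project table fed from a UI could be used here; as this is an experiment, we enter the records manually: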

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("Docker Foundation", "Pavan Devarakonda", NOW());

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("AWS", "Viswasri", NOW());

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("DevOps", "Jyotna", NOW());

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("Kubernetes", "Ismail", NOW());

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("Jenkins", "sanjeev", NOW());

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("Ansible", "Pranavsai", NOW());

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("AWS DevOps", "Shammi", NOW());

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("AWS DevOps", "Srinivas", NOW());

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("Azure DevOps", "Rajshekhar", NOW());

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("Middleware DevOps", "Melivin", NOW());

INSERT INTO trainings_tbl(training_title, training_author, submission_date) VALUES("Automation experiment", "Vignesh", NOW());

commit;

After storing a couple of records into the table, we are good to go for testing with the persistent data.

Check the table data using a 'SELECT' query:

select * from trainings_tbl;

Now let's exit from the container.

Step 5: Taking the backup

docker run --rm --volumes-from c4bkp -v $(pwd):/backup alpine tar czvf /backup/training_bkp.tar.gz /var/lib/mysql/trainingdb

or

docker run --rm --volumes-from c4bkp -v $(pwd):/backup ubuntu tar czvf /backup/training_bkp.tar.gz /var/lib/mysql/trainingdb

ls -l *gz
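The backup commands above archive the files while MySQL is still running, so a write during the copy could leave the archive in an inconsistent state. A more cautious variant, sketched here under the assumption that a short downtime is acceptable, stops the container first and starts it again afterwards. The function name `backup_volume_cold` and its default arguments are my own choices for illustration.

```shell
# backup_volume_cold is a hypothetical helper: stop the container, archive one
# database directory from its volume, then start the container again.
backup_volume_cold() {
  local container="${1:-c4bkp}"                   # container that owns the volume
  local archive="${2:-training_bkp.tar.gz}"       # file created in the current directory
  local db_dir="${3:-/var/lib/mysql/trainingdb}"  # path inside the volume

  docker stop "$container" || return 1
  # --volumes-from also works when the source container is stopped
  docker run --rm --volumes-from "$container" -v "$(pwd)":/backup \
    alpine tar czf "/backup/$archive" "$db_dir"
  local rc=$?                                     # remember whether tar succeeded
  docker start "$container"
  return "$rc"
}
```

A call such as `backup_volume_cold c4bkp training_bkp.tar.gz` would then replace the single `docker run` line.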

Here the most important option is --volumes-from, which should point to the MySQL container. We can check the contents of the backup file with tar; the t option lists what is inside the .gz archive without extracting it.

tar tzvf training_bkp.tar.gz
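In a script, the same listing can serve as a quick sanity check before the archive is moved anywhere. The sketch below builds a tiny stand-in data directory in a temporary folder so that it runs without Docker; the helper name `archive_contains` and the file name trainings_tbl.frm are illustrative assumptions only.

```shell
# archive_contains is a hypothetical helper: succeed only if the archive
# lists at least one entry whose path starts with the given prefix.
archive_contains() {
  local archive="$1" prefix="$2"
  tar tzf "$archive" | grep -q "^$prefix"
}

# Demo on throwaway files so it runs without Docker.
work=$(mktemp -d)
mkdir -p "$work/var/lib/mysql/trainingdb"
echo "dummy" > "$work/var/lib/mysql/trainingdb/trainings_tbl.frm"
tar czf "$work/training_bkp.tar.gz" -C "$work" var/lib/mysql/trainingdb

if archive_contains "$work/training_bkp.tar.gz" "var/lib/mysql/trainingdb/"; then
  echo "backup contains the trainingdb directory"
else
  echo "backup is missing the trainingdb directory" >&2
fi
```

Note that the prefix has no leading slash: tar stores the member names as relative paths.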

Migrate Data

Step 6: The tar.gz backup file can now be migrated (moved) to another Docker host machine where you wish to restore the data. Restore it to the same mount location, in a container created from the same image that the backup was taken from. For example, the source machine used the mysql:5.7 image, so the same image should be used on the destination machine.

Let me copy it to the shared folder of the Vagrant box so that it is accessible from the other Vagrant box:

cp training_bkp.tar.gz /vagrant/
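When a backup travels between machines, it may be worth confirming that the copy is byte-for-byte identical to the original. The following is a small sketch of that idea; `copy_verified` is a hypothetical helper name, and it assumes the `sha256sum` tool from GNU coreutils is available on the box.

```shell
# copy_verified is a hypothetical helper: copy a file into a directory and
# compare the SHA-256 checksums of the source and the copy.
copy_verified() {
  local src="$1" dest_dir="$2"
  local name a b
  name=$(basename "$src")
  cp "$src" "$dest_dir/" || return 1
  a=$(sha256sum < "$src")             # checksum of the original
  b=$(sha256sum < "$dest_dir/$name")  # checksum of the copy
  [ "$a" = "$b" ]                     # exit status 0 only when they match
}
```

For example, `copy_verified training_bkp.tar.gz /vagrant && echo "copy verified"`.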

Restore from the Backup

Step 7: Create a new container and restore the old container's database volume into it. Now you can open two terminals. In the first one, run the 'for_restore' container on a new volume named 'restore':

docker run -dti --name for_restore --env MYSQL_ROOT_PASSWORD=welcome1 \
  --volume restore:/var/lib/mysql mysql:5.7

# check the volume location inside the container
docker exec -it for_restore bash -c "ls -l /var/lib/mysql"

Next, restore the data from the backup tar file that we took earlier from the first_vol volume. Run this from the directory that holds the backup file:

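docker run --rm --volumes-from for_restore \
  -v $(pwd):/restore ubuntu bash -c "cd /var/lib/mysql/ && tar xzvf /restore/training_bkp.tar.gz --strip-components=3"

I googled --strip-components to understand how it helps here: it drops the given number of leading path components from each archived file name, so the var/lib/mysql prefix is removed and only the trainingdb sub-directory is extracted into /var/lib/mysql.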
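The effect of --strip-components is easy to try without Docker. The sketch below recreates the archive layout in a temporary folder (the file trainings_tbl.frm is just a stand-in) and extracts it with the first three path components removed.

```shell
work=$(mktemp -d)

# Fake source tree that mirrors the layout inside the backup archive.
mkdir -p "$work/src/var/lib/mysql/trainingdb" "$work/dest"
echo "dummy" > "$work/src/var/lib/mysql/trainingdb/trainings_tbl.frm"
tar czf "$work/bkp.tar.gz" -C "$work/src" var/lib/mysql/trainingdb

# Drop the first three components (var/lib/mysql) while extracting.
tar xzf "$work/bkp.tar.gz" -C "$work/dest" --strip-components=3

# Only the trainingdb directory remains at the top of the destination.
ls "$work/dest"              # prints: trainingdb
ls "$work/dest/trainingdb"   # prints: trainings_tbl.frm
```

The scratch directory can be removed afterwards with `rm -rf "$work"`.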

Now the exciting part of this experiment is here...

Check the other terminal: the /var/lib/mysql folder is now filled with the extracted database files.

Once you see that the data is restored, the same approach can be applied to a remote machine, which makes it a migration strategy for Docker volumes.
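A file listing shows that the files arrived; whether MySQL can actually read the table is a separate question, because InnoDB keeps part of its bookkeeping in shared files such as ibdata1, outside the trainingdb directory. A quick query against the restored container may settle it. This is a sketch only: `restored_row_count` is a hypothetical helper name, and the defaults are the container name and root password used in this article.

```shell
# restored_row_count is a hypothetical helper: ask MySQL inside the restored
# container how many rows the trainings_tbl table holds.
restored_row_count() {
  local container="${1:-for_restore}"
  local password="${2:-welcome1}"   # MYSQL_ROOT_PASSWORD used in this article
  # -N suppresses the column header so only the number is printed
  docker exec "$container" \
    mysql -uroot -p"$password" -N -e "SELECT COUNT(*) FROM trainingdb.trainings_tbl;"
}
```

If MySQL can read the restored table, the call should print 11, the number of rows inserted earlier; an error here would suggest that copying the database directory alone was not enough for this table.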

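Step 8: Cleanup of volumes (optional)

You can use the following command to delete a single data volume; datavol here stands for the name of the volume to remove:

docker volume rm datavol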

To delete all unused data volumes, use the following command:

docker volume prune
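Before pruning, it can be reassuring to look at what Docker considers unused. A minimal sketch, with `list_unused_volumes` as a hypothetical helper name; the dangling=true filter selects volumes that no container references. As far as I know, recent Docker releases prune only anonymous volumes unless --all is given, so named volumes such as first_vol may survive a plain prune.

```shell
# list_unused_volumes is a hypothetical helper name (not a Docker command).
# It lists the volumes that are not referenced by any container.
list_unused_volumes() {
  docker volume ls --filter dangling=true
}
```

Run `list_unused_volumes` first, review the list, and only then run the prune command.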
