Docker Networking

Hello, dear DevOps enthusiast, welcome back to the DevOpsHunter learners site! In this post, we explore Docker networking models, the different types of network drivers, and their benefits.


Figure: What is Docker Networking?

Understanding Docker networking

Docker provides multiple network driver plugins, installed as part of the library along with Docker itself, so you have choice and flexibility. Basically, the classification is based on the number of participating hosts: single-host or multi-host. Let's see the types of network drivers in the Docker ecosystem.


Figure: Docker network overview

Docker containers aim to be tiny, so some of the regular network commands may not be available. We need to install them inside the container.

Issue #1: Inside my container the Linux network commands 'ip' and 'ifconfig' are not working. How can I install them?

Solution: Inside your container, install the packages that provide these commands. On an 'Ubuntu' container, the iproute2 package provides 'ip' (add the net-tools package if you also want 'ifconfig'). Use the following install command:

apt update; apt install -y iproute2

Issue #2: The 'ping' command is not found. How do I resolve this?

Solution: Inside your container, run the following install command:

apt update; apt install -y iputils-ping
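The two fixes above are specific to Ubuntu. As a minimal sketch, the helper below prints the matching install command for a few package managers. The function name `net_tools_install_cmd` is hypothetical, and the Alpine and RHEL package names are assumptions that may differ between releases.

```shell
# Print the command that installs 'ip' and 'ping' for a given package manager.
# Package names for apk and yum are assumptions; verify them for your image.
net_tools_install_cmd() {
    case "$1" in
        apt) echo "apt update && apt install -y iproute2 iputils-ping" ;;
        apk) echo "apk add --no-cache iproute2 iputils" ;;
        yum) echo "yum install -y iproute iputils" ;;
        *)   echo "unsupported package manager: $1" >&2; return 1 ;;
    esac
}

# Usage: net_tools_install_cmd apt
```

The function only prints the command, so you can review it before running it inside the container.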

What is the purpose of a Docker network?

- Containers need to communicate with the external world (network)

- Containers must be reachable from the external world to provide a service (web applications must be accessible)

- Allow containers to talk to the host machine (container to host)

- Inter-container connectivity on the same host and across multiple hosts (container to container)

- Discover services provided by containers automatically (search other services)

- Load balance network traffic between many containers in a service (entry to containerization)

- Secure multi-tenant services (cloud-specific network capability)

Features of Docker networks

- Type of network - a specific network can be used for internet connectivity

- Publishing ports - open access to services running in a container

- DNS - custom DNS settings

- Load balancing - allow, deny with iptables

- Traffic flow - application access

- Logging - where logs should go

To explore the commands related to the Docker network object, use:

docker network --help


Figure: docker network help output

The following command lists Docker's default networks. It also shows the three network driver options that are ready to use:

docker network ls

# A Docker CE installation comes with 3 locally scoped (single-host) network drivers that are ready to use: bridge, host, and null. Each type of driver has its own significance.

docker network create --driver bridge app-net

docker network ls

# To remove a docker network use rm

docker network rm app-net

Let's experiment with container con1:

docker run -d -it --name con1 alpine

docker container exec -it con1 sh

# able to communicate with outbound responses

ping google.com
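Running 'docker network create' a second time with the same name fails, which gets in the way when you repeat these experiments. A small sketch of a guard follows; the function name `ensure_network` is hypothetical, and it relies only on 'docker network inspect' returning a non-zero status for an unknown network.

```shell
# Create a network only if it does not exist yet.
# $1 = network name, $2 = driver (defaults to bridge).
ensure_network() {
    name=$1
    driver=${2:-bridge}
    if docker network inspect "$name" >/dev/null 2>&1; then
        echo "network $name already exists"
    else
        docker network create --driver "$driver" "$name" >/dev/null &&
            echo "network $name created"
    fi
}

# Usage: ensure_network app-net
```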

To check the routing table entries inside a container and on the Linux box where Docker Engine is hosted:

ip route # or the short form: ip r

Now we are ready to validate, from inside the con1 container, whether it can communicate with another container, here con2, by using the 'ping' command against con2.
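When comparing the routing table of a container with that of the host, the default gateway is usually the interesting entry. The sketch below pulls it out of 'ip route' output; the function name `default_gateway` is hypothetical, and it assumes the usual "default via ADDRESS dev IFACE" line format.

```shell
# Read 'ip route' output on stdin and print the default gateway address.
default_gateway() {
    awk '$1 == "default" { print $3; exit }'
}

# Usage inside a container or on the host:
#   ip route | default_gateway
```

For a container on the default bridge, the printed address is normally the IP of the bridge interface on the host.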

Issue #3: The 'brctl' Linux command is not found. How do I fix this?

Solution: The 'brctl' command is provided by the bridge-utils package. On a RHEL-family OS, install it with the following:

yum -y install bridge-utils

# check the bridge network and its associated virtual eth cards

brctl show

Figure: The 'brctl show' command output

The 'brctl show' command lists each bridge together with all the virtual Ethernet interfaces attached to it; one is created for each Docker container. The same is visible for the default bridge and for user-defined bridges. Combined with the containers' IP addresses, this mapping shows which bridge each container is attached to.
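If bridge-utils is not available on your host, the 'ip' command from iproute2 can show similar information. The sketch below extracts the interface names from one-line 'ip' output; the function name `veth_names` is hypothetical, and docker0 is assumed to be the name of the default bridge interface.

```shell
# Read 'ip -o link show' output on stdin and print only the interface names,
# without the '@ifN' peer suffix.
veth_names() {
    awk -F': ' '{ sub(/@.*/, "", $2); print $2 }'
}

# Usage on the Docker host (docker0 = default bridge, an assumption):
#   ip -o link show master docker0 | veth_names
```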

Default Bridge Network in Docker

The Docker ecosystem provides this network by default: when you create your first container, it is attached to this default network. You can validate the connection by using the 'inspect' subcommand on the container or on the network.

The following experiment will help you understand the default bridge network, which is already available while the Docker daemon is running.

docker run -d -it --name con1 alpine

docker run -d -it --name con2 alpine

docker container ps

docker network inspect bridge

Figure: Default bridge network connected with con1 and con2

After looking at the output of the inspect command, you will get a clear idea of how the default bridge works.
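The inspect output also contains the IP address of every attached container. As a minimal sketch, the helper below prints just the address of one container by using a Go template with 'docker inspect'. The function name `container_ip` is hypothetical; if the container is attached to several networks, the addresses are printed back to back.

```shell
# Print the IP address of a container on its attached network(s).
container_ip() {
    docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# Usage: container_ip con2
```

This is one way to obtain the value for placeholders such as ipaddr-con2 in the ping tests.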



Now let's look inside the containers con1 and con2:

docker exec -it con1 sh

ip addr show

ping -c 4 google.com

ping -c 4 ipaddr-con2 # This you have to fetch and use

ping -c 4 con2 # This should not work, which means DNS does not work on the default bridge

exit

Now that this experiment is done, we can remove the containers we tested so far: `docker rm -v -f con1 con2`

User-defined Bridge Network

Docker allows us to create new bridge networks. It is very simple, and they are much more powerful than the default network. Let's explore a user-defined network named 'mynetwork' in detail:

docker network create --driver bridge mynetwork

docker network ls

docker inspect mynetwork

docker run -d -it --name con1 --network mynetwork alpine

docker run -d -it --name con2 --network mynetwork alpine

docker ps

docker inspect mynetwork

Now let's look inside the container and use the same set of commands to examine external connectivity and the ability to reach the other container by name and by IP address:

docker exec -it con1 sh

ip addr show

ping -c 4 google.com

ping -c 4 ipaddr-con2

ping -c 4 con2 # This should WORK, which means DNS is available in mynetwork

exit
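The same name-resolution check can be run from the host without opening a shell in the container. A minimal sketch, assuming the image ships a ping that accepts -c and -W (the alpine image used here does); the function name `can_reach` is hypothetical.

```shell
# Ping a target (name or IP) once from inside a container and report the result.
# $1 = source container, $2 = target name or IP address.
can_reach() {
    if docker exec "$1" ping -c 1 -W 2 "$2" >/dev/null 2>&1; then
        echo "$1 -> $2: reachable"
    else
        echo "$1 -> $2: unreachable"
    fi
}

# Usage: can_reach con1 con2
```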

Clean up all the containers after the above experiment:

docker rm -v -f con1 con2 #cleanup

Network-to-network communication

Docker networks are isolated from each other. Let us create 2 containers in each network and try to communicate from one network to the containers in the other. To test this use case, we will create 2 networks, say mynetwork1 and mynetwork2, and then create 2 containers attached to each network. Find the IP address of each container using 'inspect', and then check the connection between the two user-defined bridge networks.

docker network create --driver bridge mynetwork1

docker network create --driver bridge mynetwork2

docker run -d -it --name con1 --network mynetwork1 alpine

docker run -d -it --name con2 --network mynetwork1 alpine

docker run -d -it --name con3 --network mynetwork2 alpine

docker run -d -it --name con4 --network mynetwork2 alpine

docker inspect mynetwork1

docker inspect mynetwork2

Now that we have the IP addresses of the containers in both networks from the 'docker inspect' command, we are ready for the final test: communication from a container in mynetwork1 to a container in mynetwork2 using the ping command.

docker exec -it con1 sh

ping -c 4 ipaddr-con3 # get the IP address using docker container inspect con3

ping -c 4 con3 # This will not work because the two bridges are isolated

This shows that two user-defined networks are isolated from each other; they are private subnets.
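Whether two containers can talk to each other comes down to whether they share a network. The sketch below lists the networks that two containers have in common, so an empty result matches the failed ping above. The function names `container_networks` and `shared_networks` are hypothetical.

```shell
# Print the names of the networks a container is attached to, one per line.
container_networks() {
    docker inspect -f '{{range $k, $v := .NetworkSettings.Networks}}{{println $k}}{{end}}' "$1"
}

# Print the networks that two containers have in common (nothing if isolated).
shared_networks() {
    nets_a=$(container_networks "$1")
    container_networks "$2" | while IFS= read -r net; do
        [ -n "$net" ] || continue
        printf '%s\n' "$nets_a" | grep -Fxq -- "$net" && printf '%s\n' "$net"
    done
    return 0
}

# Usage: shared_networks con1 con3
```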

Docker Host networking driver

As the name suggests, with the host driver the container uses the network of the Docker host itself. To run a container on the host network, use '--network host'. Here is the host network experiment:

docker run --rm -d --network host --name my_nginx nginx

curl localhost

Now you know how to run a container attached to a network. But can a container that was already created on the default bridge network be attached to a custom bridge network? And does it keep the same IP address?
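As a quick check on the host network experiment above, you can ask Docker which network mode a container was started with. A minimal sketch; the function name `uses_host_network` is hypothetical.

```shell
# Succeed (exit status 0) if the container was started with --network host.
uses_host_network() {
    [ "$(docker inspect -f '{{.HostConfig.NetworkMode}}' "$1")" = "host" ]
}

# Usage: uses_host_network my_nginx && echo "my_nginx shares the host network"
```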

How to connect a user-defined network object to a running container?

We can move a Docker container from the default bridge network to a user-defined bridge network. When this change happens, the container receives a new IP address on the user-defined network. The 'docker network connect' command is used to connect a running container to an existing user-defined bridge. The syntax is as follows:

docker network connect [OPTIONS] NETWORK CONTAINER

Example with an already running container, here ng2:

docker run -dit --name ng2 \
  --publish 8080:80 \
  nginx:latest

docker network connect mynetwork ng2

After connecting to the user-defined bridge network, check the IP address of the container.

To disconnect, use the following:

docker network disconnect mynetwork ng2

Once it is disconnected, check the IP address of the container ng2 using 'docker container inspect ng2'. This shows that a container can be migrated from one network to another without stopping it, and no errors occur while doing so.
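Connect and disconnect can be combined into a single "move" step. In the sketch below the container is attached to the new network first and detached from the old one only if that succeeds, so it is never left without a network. The function name `move_container` is hypothetical.

```shell
# Move a running container from one network to another.
# $1 = container, $2 = network to leave, $3 = network to join.
move_container() {
    container=$1
    from=$2
    to=$3
    docker network connect "$to" "$container" &&
        docker network disconnect "$from" "$container"
}

# Usage: move_container ng2 bridge mynetwork
```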

Overlay Network for Multi-host

A Docker overlay network is part of Docker Swarm or Kubernetes, where you have a multi-host Docker ecosystem.

docker network create --driver overlay net-overlay # this command fails with an ERROR while Swarm is not active

docker network ls

Overlay networks become visible in the network list once you activate Swarm on your Docker Engine. With overlay driver plugins that support it, you can also create multiple subnetworks.

# if swarm is not initialized, use the following command with the IP address of your Docker host

docker swarm init --advertise-addr 10.0.2.15

# once the swarm is active, run the 'docker network create' command above again; the scope of the overlay network shows swarm

An overlay network serves containers that are associated with a Docker 'service' object instead of a plain 'container' object.

docker service create --help

# Create a service of nginx

docker service create --network=net-overlay --name=app1 --replicas=4 nginx

docker service ls | grep app1

docker service inspect app1 | more # look for the VirtualIPs in the output
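Because the overlay driver depends on Swarm, it can help to check the Swarm state before creating the network. A minimal sketch that initializes Swarm only when the node is not already active; the function name `ensure_swarm` is hypothetical, and the advertise address is whatever IP your Docker host uses.

```shell
# Initialize Swarm on this node unless it is already active.
# $1 = IP address to advertise to other nodes.
ensure_swarm() {
    state=$(docker info -f '{{.Swarm.LocalNodeState}}')
    if [ "$state" = "active" ]; then
        echo "swarm already active"
    else
        docker swarm init --advertise-addr "$1"
    fi
}

# Usage: ensure_swarm 10.0.2.15
```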

The Docker overlay driver allows not only multi-host communication; with overlay driver plugins that support it, you can also create multiple subnetworks.

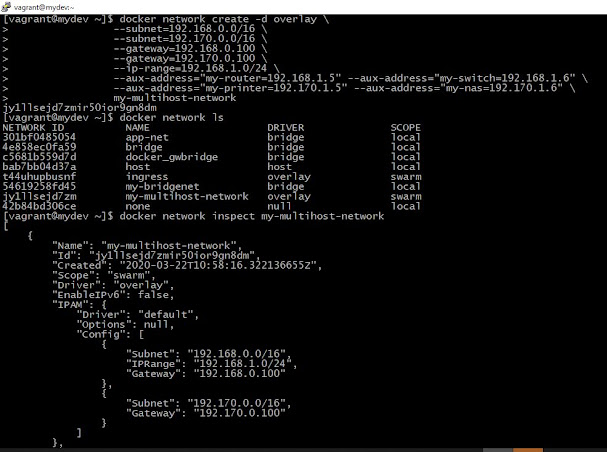

docker network create -d overlay \
  --subnet=192.168.0.0/16 \
  --subnet=192.170.0.0/16 \
  --gateway=192.168.0.100 \
  --gateway=192.170.0.100 \
  --ip-range=192.168.1.0/24 \
  --aux-address="my-router=192.168.1.5" --aux-address="my-switch=192.168.1.6" \
  --aux-address="my-printer=192.170.1.5" --aux-address="my-nas=192.170.1.6" \
  my-multihost-network

docker network ls

docker network inspect my-multihost-network # Check the subnets listed out of this command
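The full inspect output is long. To see only the subnets, a Go template can filter the IPAM section. A minimal sketch; the function name `network_subnets` is hypothetical.

```shell
# Print the subnets configured on a network, one per line.
network_subnets() {
    docker network inspect -f '{{range .IPAM.Config}}{{println .Subnet}}{{end}}' "$1"
}

# Usage: network_subnets my-multihost-network
```

For the network created above, this should list both subnets passed with --subnet.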

The Docker documentation has a useful network summary, which says:

- User-defined bridge networks are best when you need multiple containers to communicate on the same Docker host.

- Host networks are best when the network stack should not be isolated from the Docker host, but you want other aspects of the container to be isolated.

- Overlay networks are best when you need containers running on different Docker hosts to communicate, or when multiple applications work together using swarm services.

- Macvlan networks are best when you are migrating from a VM setup or need your containers to look like physical hosts on your network, each with a unique MAC address.

References:

- Docker Networking: https://success.docker.com/article/networking

- User-defined bridge network: https://docs.docker.com/network/bridge/

- Docker Expose Ports externally: https://bobcares.com/blog/docker-port-expose/
