HAProxy on Docker: Load Balancing Swarm Nginx Web Services

What does HAProxy do?

HAProxy is a free, open-source load balancer that lets DevOps/SRE professionals distribute TCP-based traffic across many backend servers (Layer 4). It also works as a Layer 7 load balancer for HTTP traffic.

HAProxy runs on Docker

The goal of this post is to learn more about HAProxy and how to configure it with a set of web servers as its backend. HAProxy accepts requests over HTTP; port 80 is the usual default, although this setup exposes HAProxy on other ports (7780 inside the container, published as 8880 on the host). HAProxy supports multiple balancing methods; this experiment uses round robin. The setup used in this experiment is shown below.

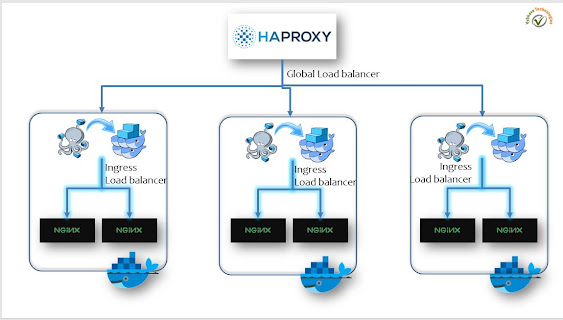

Figure: Nginx web app load balanced through the Swarm ingress behind HAProxy
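HAProxy's `roundrobin` method, which the backend in this post uses, hands each new request to the next server in turn. The sketch below only illustrates that idea: `round_robin` is a hypothetical helper, and it assumes equal server weights and that every server is healthy, whereas HAProxy's real scheduler also honours weights and skips servers that fail health checks.

```shell
#!/bin/sh
# Illustration only (hypothetical helper, not part of HAProxy):
# simulate plain round-robin over equally weighted, healthy servers.
# Usage: round_robin <request_count> <server>...   (at least one server)
round_robin() {
  requests="$1"
  shift
  i=1
  while [ "$i" -le "$requests" ]; do
    server="$1"
    echo "request $i -> $server"
    # Rotate the list: move the server just used to the back.
    shift
    set -- "$@" "$server"
    i=$((i + 1))
  done
}
```

For example, `round_robin 5 node1 node2 node3 node4` sends requests 1 to 4 to node1 through node4 and request 5 back to node1.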

This post works in two phases: first, we make the web application highly available by joining multiple Docker machines into a Swarm cluster and deploying the application with the `docker service` command; then we put HAProxy in front of it as the load balancer.

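Step 1: Create a Swarm cluster from the four web server machines (192.168.0.10 to 192.168.0.13, the same addresses used as backend servers later). On the first machine, initialise the Swarm:

```shell
docker swarm init --advertise-addr=192.168.0.10
```

Join the other nodes by running, on each of them, the `docker swarm join` command printed in the output above (`docker swarm join-token worker` prints it again if needed). Then confirm that all nodes are listed:

```shell
docker node ls
```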
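Before deploying anything, it can help to check that every node reported by `docker node ls` is actually usable. The sketch below is a hypothetical helper, `all_nodes_ready`, that assumes it is fed the output of `docker node ls --format '{{.Hostname}} {{.Status}} {{.Availability}}'` and fails if any node is not both `Ready` and `Active`.

```shell
#!/bin/sh
# Hypothetical helper: verify Swarm node health from `docker node ls` output.
# Expects lines produced by:
#   docker node ls --format '{{.Hostname}} {{.Status}} {{.Availability}}'
# Prints every node that is not Ready/Active and returns 1 if there is one.
all_nodes_ready() {
  awk '
    $2 != "Ready" || $3 != "Active" { print "not ready: " $0; bad = 1 }
    END { exit (bad ? 1 : 0) }
  '
}

# Example, run on the manager:
#   docker node ls --format '{{.Hostname}} {{.Status}} {{.Availability}}' | all_nodes_ready
```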

Deploy web service

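Step 2: Deploy a special Nginx-based image designed to run on a Swarm cluster. The service runs four replicas and publishes container port 80 as port 8090 through the Swarm ingress, so every node answers on port 8090:

```shell
docker service create --name webapp1 --replicas=4 --publish 8090:80 fsoppelsa/swarm-nginx:latest
```

Now check which Swarm nodes the service's tasks are running on:

```shell
docker service ps webapp1
```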
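To see at a glance how the four replicas are spread, the output of `docker service ps` can be summarised per node. The sketch below is a hypothetical helper, `replicas_per_node`, assuming input from `docker service ps webapp1 --filter desired-state=running --format '{{.Node}} {{.CurrentState}}'`, where the state looks like `Running 5 minutes ago`.

```shell
#!/bin/sh
# Hypothetical helper: count running tasks per Swarm node.
# Expects lines produced by:
#   docker service ps webapp1 --filter desired-state=running \
#     --format '{{.Node}} {{.CurrentState}}'
# Prints "<node> <count>" for every node with at least one Running task.
replicas_per_node() {
  awk '$2 == "Running" { count[$1]++ }
       END { for (n in count) print n, count[n] }' | sort
}
```

Keep in mind that, with the ingress routing mesh, port 8090 answers on every node even if that node runs no local replica, so this check is about how tasks are spread rather than which nodes are reachable.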

Load balancer Configuration

Step 3: Create a new node, Node 5, dedicated to HAProxy load balancing.

Step 4: Create the HAProxy configuration file that load balances webapp1 across the Swarm nodes. During this experiment I modified this haproxy.cfg file a couple of times to get the port bindings right and to get health stats for the backend webapp1 servers.

```shell
vi /opt/haproxy/haproxy.cfg
```

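```
global
    daemon
    # Log to the container's stdout so that `docker logs` shows HAProxy's log
    log stdout format raw local0 debug
    maxconn 50000

defaults
    log global
    mode http
    # Timeouts are in milliseconds when no unit is given
    timeout client 5000
    timeout server 5000
    timeout connect 5000

# Simple health endpoint that always answers 200 (http-request return needs HAProxy 2.2+)
listen health
    bind :8000
    http-request return status 200
    option httpchk

# Statistics page
listen stats
    bind :7000
    mode http
    stats uri /

# Client-facing entry point
frontend main
    bind *:7780
    default_backend webapp1

backend webapp1
    balance roundrobin
    mode http
    # Every Swarm node answers on 8090 through the ingress routing mesh;
    # "check" enables health checks so the stats page shows each server's state
    server node1 192.168.0.13:8090 check
    server node2 192.168.0.12:8090 check
    server node3 192.168.0.11:8090 check
    server node4 192.168.0.10:8090 check
```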

Save the file, and we are ready to proceed.
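Because every Swarm node publishes webapp1 on the same port, the backend section follows a fixed pattern and can be generated instead of typed by hand. The sketch below is a hypothetical helper, `gen_backend`; the server labels `node1`, `node2`, ... are generated names, and `check` turns on HAProxy's default TCP connect health checks.

```shell
#!/bin/sh
# Hypothetical helper: print a HAProxy backend section for a service that is
# published on the same port on every Swarm node.
# Usage: gen_backend <backend_name> <port> <node_ip>...
gen_backend() {
  name="$1"
  port="$2"
  shift 2
  printf 'backend %s\n' "$name"
  printf '    balance roundrobin\n'
  printf '    mode http\n'
  i=1
  for ip in "$@"; do
    # "check" enables health checks so the stats page reports UP/DOWN.
    printf '    server node%d %s:%s check\n' "$i" "$ip" "$port"
    i=$((i + 1))
  done
}
```

Running `gen_backend webapp1 8090 192.168.0.13 192.168.0.12 192.168.0.11 192.168.0.10` prints a section equivalent to the backend above, which can be pasted into haproxy.cfg.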

Running HAProxy on Docker

The following docker run command starts HAProxy in detached mode and publishes the ports defined in the HAProxy configuration (the frontend port 7780 as host port 8880, and the stats page on 7000). It also bind-mounts the host directory /opt/haproxy into the container in read-only mode, so the configuration file on the host is visible inside the container:

```shell
docker run -d --name my-haproxy \
  -p 8880:7780 -p 7000:7000 \
  -v /opt/haproxy:/usr/local/etc/haproxy:ro \
  haproxy
```
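Once the container is up, a quick smoke test is to send a handful of requests to the published frontend port and make sure they all succeed. The sketch below is a hypothetical helper, `all_http_ok`, that reads one HTTP status code per line (for example from `curl -w '%{http_code}'`, which prints `000` when the connection fails) and fails unless every request returned 200. The URL `http://localhost:8880/` assumes the test runs on Node 5 itself.

```shell
#!/bin/sh
# Hypothetical helper: read HTTP status codes (one per line) from stdin,
# report every non-200 response, and fail if any request was not 200
# or if no requests were seen at all.
all_http_ok() {
  awk '
    { total++ }
    $1 != "200" { bad++; print "request " NR ": HTTP " $1 }
    END { exit ((total == 0 || bad > 0) ? 1 : 0) }
  '
}

# Example, run on the HAProxy node:
#   for i in 1 2 3 4 5 6 7 8; do
#     curl -s -o /dev/null -w '%{http_code}\n' http://localhost:8880/
#   done | all_http_ok
```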

Troubleshooting hints

1. Investigate what is in the HAProxy container logs:

```shell
docker logs my-haproxy
```

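2. To start over between attempts, this alias force-removes every container on the host, together with its anonymous volumes, so use it with care:

```shell
alias drm='docker rm -v -f $(docker ps -aq)'
```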
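Besides the logs, the stats page published on port 7000 shows whether HAProxy considers each backend server healthy. HAProxy can export that page as CSV by appending `;csv` to the stats URI, and the 18th CSV column is the server status. The sketch below is a hypothetical helper, `backend_status`, that filters that CSV down to one line per server; with `check` enabled the status is `UP` or `DOWN`, and without it HAProxy reports `no check`.

```shell
#!/bin/sh
# Hypothetical helper: print "<server> <status>" for each server of a backend
# from HAProxy's stats CSV (column 1 = pxname, 2 = svname, 18 = status).
# Usage: backend_status <backend_name> < stats.csv
backend_status() {
  awk -F, -v be="$1" '
    # Skip the aggregate FRONTEND/BACKEND rows; keep only real servers.
    $1 == be && $2 != "FRONTEND" && $2 != "BACKEND" { print $2, $18 }
  '
}

# Example, assuming the stats page from this post is reachable on Node 5:
#   curl -s 'http://localhost:7000/;csv' | backend_status webapp1
```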

There were many changes in HAProxy between version 1.7 and the latest version, which I encountered and resolved with the following:
